Collection engine Tornado

Big data processing begins with the generation or collection of data. In a traditional database (DB) environment, data is generated in the application and the DB's front end rather than imported from outside, and processing starts from there. In big data, by contrast, data is brought in from outside rather than generated internally, so processing starts with data collection.

Introduction

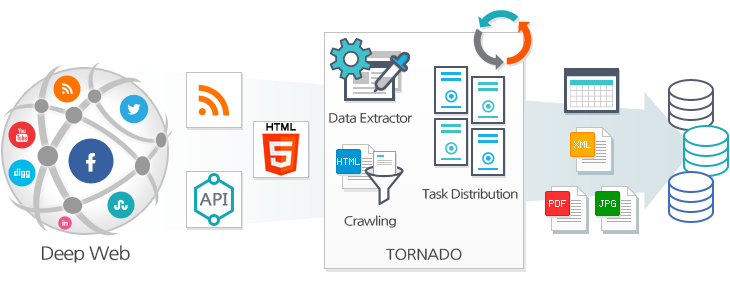

Saltlux's Big Data Suite collection engine (Tornado) supports both active and passive collection. It is a strong big data processing engine that performs real-time, automatic, parallel collection of data reflecting users' preferences from big data generated across sources and industries such as the deep web, SNS, shopping sites, IoT, and streaming data. Tornado provides an optimized big data collection environment for real-time analysis of social big data, competitors, markets and products, risk management, and recognizing the voice of the customer.

The engine provides data loss and duplication prevention, data compression, data structuring, encryption of stored data, and thorough validation, with attention to user convenience. In addition to powerful web collection, it automatically extracts, converts, and stores big data from hidden web pages. Tornado is a powerful big data collection engine that can collect social big data from sources such as news, RSS, Twitter, Facebook, and Weibo.

< big data collection engine concept map >

Main Features

- Built-in features for collecting various big data

Various collection features (user-scenario-based collection, RSS collection, web collection, deep web collection, social collection, and OpenAPI-based collection) are built in to collect many types of internal and external big data according to the user's needs.

- Built-in collection rule editor (workbench) to ensure data extraction performance

A web-based collection rule editor, designed with usability in mind, makes it easy to extract and collect data from various types of dynamic websites, such as those built with JavaScript and AJAX.

- Supports parallel distributed collection and multiple operating systems

Using the distributed parallel method, it can collect large amounts of data simultaneously under multiple configured rules, faster and more stably. It can also be installed and operated on multiple operating systems (UNIX, Windows, etc.).

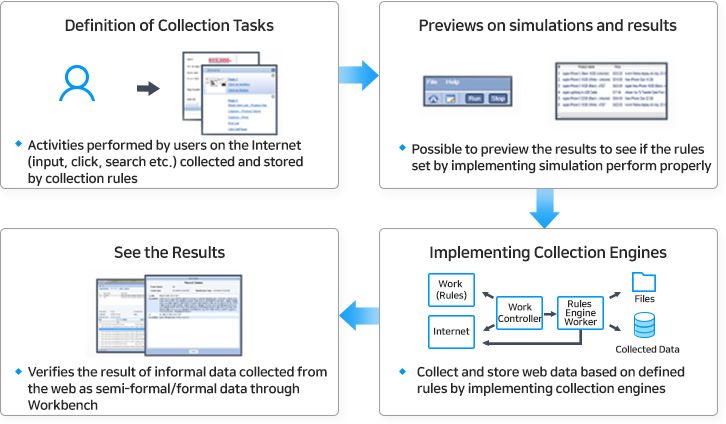
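As a rough illustration of the parallel collection idea, the sketch below runs a fetch function over several sources concurrently. It is not Tornado's implementation: `collect_in_parallel`, `fetch`, and `max_workers` are hypothetical names, and it uses threads on a single machine rather than distribution across nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def collect_in_parallel(sources, fetch, max_workers=4):
    """Run the hypothetical `fetch` callable over all sources concurrently.

    Returns a dict mapping each source to its result, or to the exception
    raised while fetching it, so one failing source does not stop the rest.
    """
    sources = list(sources)  # allow any iterable, since we iterate twice

    def safe_fetch(source):
        try:
            return fetch(source)
        except Exception as exc:  # record the failure and keep going
            return exc

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with sources
        results = list(pool.map(safe_fetch, sources))
    return dict(zip(sources, results))
```

In a real collector, `fetch` would download and parse one source; here it is left as a parameter so the concurrency pattern can be tried without network access.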

- Collection simulation and user collection preview features

For user convenience, a collection simulation applies previously generated collection rules so that users can preview and confirm the quality of the collected data before actual collection begins.

- Easy-to-use management tools

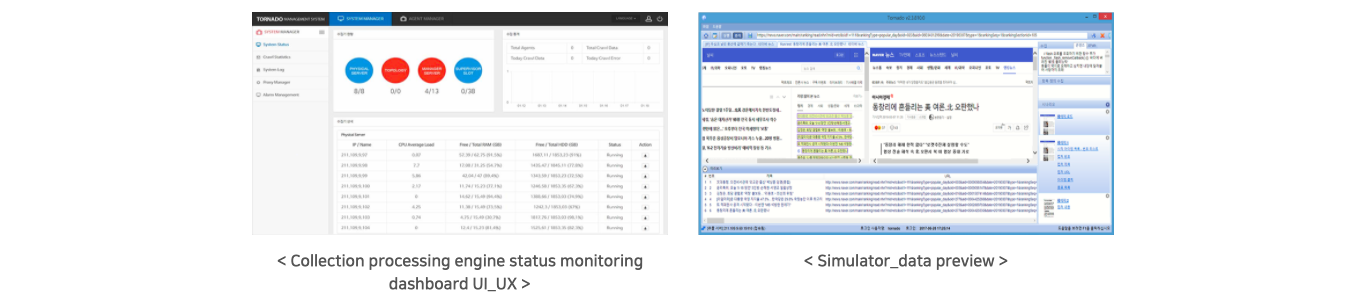

The operator/manager can easily and quickly check the current status through an integrated dashboard, which monitors the overall condition of the collection engine, and an operation management tool, which monitors the collection policies and schedule settings for each collection source in real time.

Main features and specifications

To handle the various internal and external data collection processes required for intelligent integrated analysis of structured big data, the Big Data Collection Engine (Tornado) provides collection functions such as user-scenario-based collection, RSS-based collection, deep web collection, metasearch collection, social media collection, and OpenAPI collection. The collection engine's internal simulator can be used to verify that a user-defined collection task runs as intended. During actual operation, it provides scheduling, status monitoring, and operation management functions to monitor collection results in real time.

< Collection engine operation procedures >

- Scenario-based collection feature

Data about the collection target is extracted and collected according to user scenarios from various sites such as news sites, blogs, shopping malls, and general homepages. It provides a scheduling feature to set collection cycles and a status history feature to view collection status within the workbench.

- Deep web collection feature

It can easily collect the information within websites, either by collecting site-wide information starting from a URL or by filtering with URL patterns or keywords. It also provides a scheduling feature to set the collection cycle and a status history view feature to check the collection status.
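Filtering by URL pattern or keyword can be sketched as follows. This is a minimal illustration under assumptions, not the engine's rule format: `filter_pages` is a hypothetical helper, the URL pattern is treated as a regular expression, and keywords are matched case-insensitively against already-downloaded page text.

```python
import re


def filter_pages(pages, url_pattern=None, keywords=()):
    """Keep (url, text) pairs that pass every configured filter.

    url_pattern: optional regex searched in the URL.
    keywords: optional strings; a page passes if its text contains any.
    """
    compiled = re.compile(url_pattern) if url_pattern else None
    lowered = [k.lower() for k in keywords]
    kept = []
    for url, text in pages:
        if compiled and not compiled.search(url):
            continue  # URL does not match the pattern
        if lowered and not any(k in text.lower() for k in lowered):
            continue  # none of the keywords appear in the page text
        kept.append((url, text))
    return kept
```

With neither filter set, every page is kept, which corresponds to site-wide collection.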

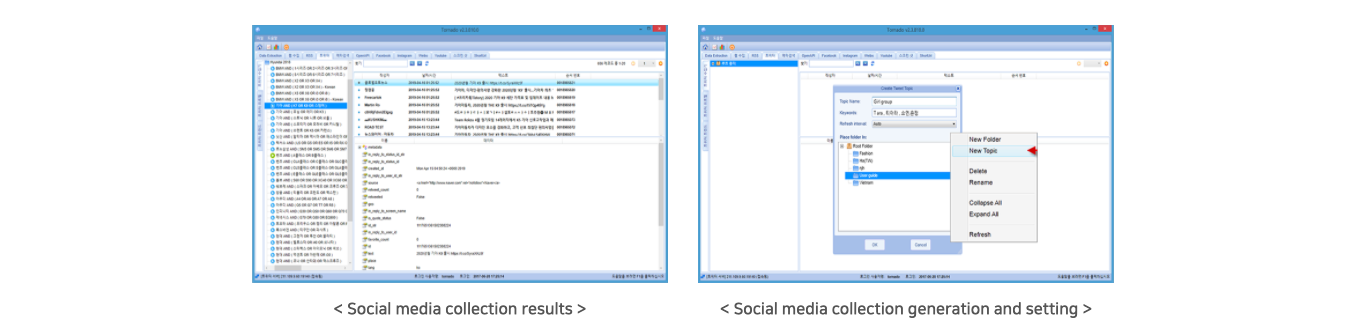

- Social media collection feature

It collects multiple types of social data, such as Twitter, public Facebook pages, and Weibo timelines, and provides a scheduling feature to set the collection cycle for each target. It also has a status history view function to verify the status.

- Collection engine operation management feature

① Operation management feature – status monitoring dashboard feature

② User(manager) management feature

③ Management feature per collection target (project)

- RSS collection feature

It reads RSS (Really Simple Syndication) feeds and extracts the data within the collection target feed as well as the original data. It includes a scheduling function to set the collection cycle and a collection status history feature that lets users check the collection status from within the workbench.
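Reading a feed and extracting its entries might look like the sketch below, which uses only Python's standard library. `parse_rss` is a hypothetical helper and assumes a plain RSS 2.0 document without namespaced elements; the `link` of each item is what a collector would then follow to fetch the original article.

```python
import xml.etree.ElementTree as ET


def parse_rss(xml_text):
    """Extract title, link, and description from each <item> of an RSS 2.0 feed."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        items.append({
            # findtext returns None for a missing element, so default to ""
            "title": (item.findtext("title") or "").strip(),
            "link": (item.findtext("link") or "").strip(),
            "description": (item.findtext("description") or "").strip(),
        })
    return items
```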

- Open API-based collection feature

It makes it easy to collect various documents and open data, including domestic public data, overseas public data, and local government public data, and provides a scheduling function to set the collection cycle for each target. It also provides a collection status history view function to verify the status.

- Metasearch collection feature

It has a keyword-based collection feature that sends user keywords to various search engines, including Google, Bing, Daum, Naver, and Yahoo, and consolidates the search results into a single list. It also provides a scheduling feature to set the collection cycle for the collection target and a status history view feature to check the status.
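The consolidation step can be sketched as merging per-engine result lists and removing duplicates. The code below is an assumption-laden illustration, not Tornado's logic: `consolidate_results` is a hypothetical helper, the per-engine results are assumed to be already fetched as `(url, title)` tuples, and two URLs are treated as the same if they differ only by a trailing slash.

```python
def consolidate_results(results_by_engine):
    """Merge per-engine result lists into one list, deduplicated by URL.

    results_by_engine maps an engine name to a ranked list of (url, title)
    tuples. The first occurrence of each URL wins, and every engine that
    returned it is recorded.
    """
    merged = {}  # dicts keep insertion order, so first-seen order is preserved
    for engine, results in results_by_engine.items():
        for url, title in results:
            key = url.rstrip("/")  # simplistic normalization (assumption)
            entry = merged.setdefault(
                key, {"url": url, "title": title, "engines": []}
            )
            if engine not in entry["engines"]:
                entry["engines"].append(engine)
    return list(merged.values())
```

Recording which engines returned each URL leaves room for a later ranking step, for example preferring results found by several engines.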

Main engine screen