Speech Synthesis Engine (TTS)

Speech synthesis, the artificial generation of human speech, is also called text-to-speech (TTS) because it converts text into speech. Saltlux AI Suite’s speech synthesis engine learns human voices from recorded sentences and generates artificial speech with human-like tone and intonation. In addition to its pre-trained voices, the engine can synthesize speech with the voices of specific individuals or domains learned in real time. Because each trained model is provided as a separate service through an endpoint, the engine can be used in a wide range of AI services.

Main Features

Natural and fast speech synthesis

Many recent speech synthesis products use deep learning to overcome the shortcomings of earlier methods. However, it is difficult to satisfy quality and performance requirements at the same time. Saltlux’s speech synthesis engine combines the Tacotron model, which secures stable performance during training, with the Hybrid-Tacotron deep learning model, which applies Tacotron2 to ensure natural-sounding synthesis during transfer learning. Trained on human voice data, this approach delivers stable voice quality and high synthesis quality. In addition, the synthesis process is parallelized to reach the speed that commercial services require.
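The text does not say at what granularity synthesis is parallelized. As a minimal sketch, assuming sentence-level parallelism, the input can be split into sentences, each sentence synthesized concurrently, and the audio joined back in the original order. `synthesize_sentence` is a hypothetical placeholder for the acoustic model and vocoder, not part of Saltlux’s engine.

```python
import re
from concurrent.futures import ThreadPoolExecutor


def split_sentences(text: str) -> list[str]:
    """Naively split text after sentence-final punctuation (., !, ?)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def synthesize_sentence(sentence: str) -> list[float]:
    """Hypothetical stand-in for the acoustic model + vocoder.

    Returns 160 samples of silence per character so the output length is
    deterministic; a real engine would return the generated waveform.
    """
    return [0.0] * (len(sentence) * 160)


def synthesize(text: str, workers: int = 4) -> list[float]:
    """Synthesize each sentence concurrently and join the audio in order."""
    sentences = split_sentences(text)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map yields results in input order, not completion order.
        chunks = list(pool.map(synthesize_sentence, sentences))
    audio: list[float] = []
    for chunk in chunks:
        audio.extend(chunk)
    return audio


if __name__ == "__main__":
    samples = synthesize("안녕하세요. 반갑습니다!")
    print(len(samples))  # 1920 (12 characters * 160 samples)
```

Threads only speed things up if the model call releases Python’s GIL, as native or GPU inference usually does; for pure-Python work, `ProcessPoolExecutor` is the drop-in alternative.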

Highly efficient personalized speech synthesis

Saltlux’s speech synthesis engine uses transfer learning, in which the engine continues training an existing, well-trained model on additional speech data. With transfer learning, a speaker’s voice can be synthesized from only about 30 minutes of training data. This makes the process highly efficient, because it greatly reduces the cost of large-scale voice transcription work.

Domain-specific Korean notation conversion and speech synthesis

Most Korean speech synthesis engines have difficulty pronouncing text written in non-Korean notation, such as English words, numbers, and units, which limits the quality of the resulting service. Saltlux’s speech synthesis engine provides conversion features that smoothly convert non-Hangul notation into Hangul and render English words as phonetic transcriptions.

Main features and specifications

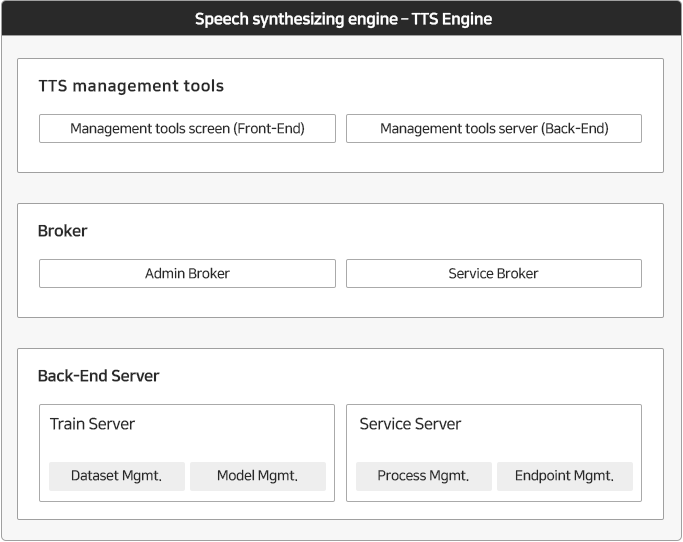

The functions of the speech synthesis engine fall into two areas: learning management and service management. The learning management area trains new speech synthesis models on specific voice data and manages them. The service management area turns the models trained by the engine into services, then deploys and manages them so that other service applications can access and use them.

< Speech synthesis engine system architecture >

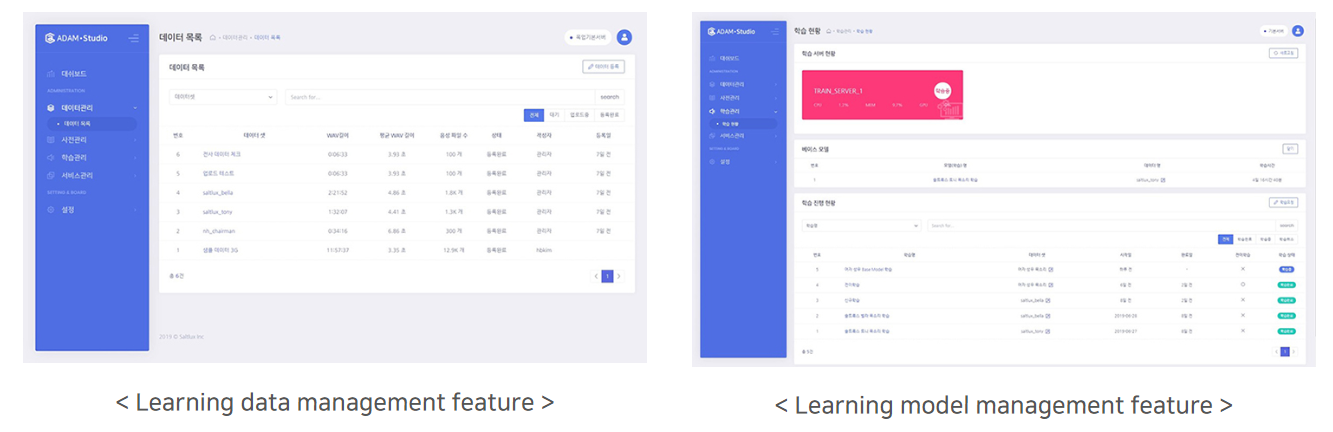

Learning data management

This feature registers and manages the learning data needed to train speech synthesis models. Learning data consists of voice files and transcription files that contain the spoken content of each voice file. When training a speech synthesis model, users can upload data sets that vary in length, volume, and speaker, and apply them selectively.

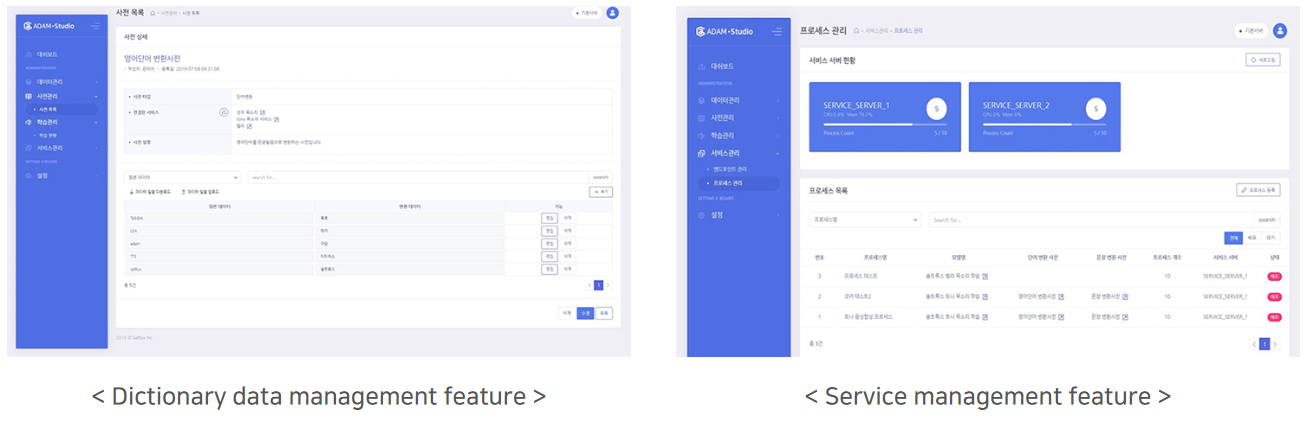
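Since each learning-data item pairs a voice file with its transcription, a minimal sketch of this data model might look like the following. The `Utterance` record, the `select` helper, and the minute budget are hypothetical; the helper only shows how data sets could be filtered by speaker and capped at a total duration, for example the roughly 30 minutes used for transfer learning. Durations are read from WAV headers with Python’s standard `wave` module.

```python
import wave
from dataclasses import dataclass


@dataclass(frozen=True)
class Utterance:
    """Hypothetical learning-data record: one voice file plus its transcript."""
    audio_path: str     # voice file
    transcript: str     # spoken content of the voice file
    speaker: str
    duration_sec: float


def wav_duration(path: str) -> float:
    """Duration of a PCM WAV file in seconds, read from its header."""
    with wave.open(path, "rb") as w:
        return w.getnframes() / w.getframerate()


def select(utterances, speakers, max_minutes=None):
    """Keep utterances from the given speakers, skipping any that would
    push the running total past max_minutes (if a budget is set)."""
    chosen, total = [], 0.0
    for u in utterances:
        if u.speaker not in speakers:
            continue
        if max_minutes is not None and total + u.duration_sec > max_minutes * 60:
            continue
        chosen.append(u)
        total += u.duration_sec
    return chosen
```

The greedy budget check keeps trying later, shorter utterances after one is skipped, so the selection fills the budget as far as input order allows rather than stopping at the first overflow.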

Dictionary management

The engine uses language dictionaries to preprocess the text sentences entered for speech synthesis. Users can register and manage words that must be converted in advance, as well as terms whose pronunciation varies by domain. A different dictionary can be configured and applied for each service.

Learning Management

The learning management features are used to train speech synthesis models. By selecting learning data and adjusting training parameters, users can create a new speech synthesis model or perform transfer learning on top of a previously trained model. Users can also control training progress and model quality, maintain many versions of trained models, and deploy them to the services that need them.
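To make the version and deployment bookkeeping concrete, here is a minimal in-memory sketch, assuming each trained model records the learning data sets and parameters it used plus, for transfer learning, the version it started from. `ModelRegistry` and its methods are hypothetical and do not describe the engine’s actual API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelVersion:
    """Hypothetical record of one trained model version."""
    name: str
    version: int
    dataset_ids: list[str]
    params: dict
    base: Optional["ModelVersion"] = None  # set when transfer-learned from another version


class ModelRegistry:
    """Hypothetical in-memory store of model versions and service deployments."""

    def __init__(self) -> None:
        self._versions: dict[str, list[ModelVersion]] = {}
        self._deployments: dict[str, ModelVersion] = {}

    def register(self, name, dataset_ids, params, base=None) -> ModelVersion:
        """Record a newly trained version; numbering starts at 1 per model name."""
        versions = self._versions.setdefault(name, [])
        mv = ModelVersion(name, len(versions) + 1, list(dataset_ids), dict(params), base)
        versions.append(mv)
        return mv

    def versions(self, name: str) -> list[ModelVersion]:
        return list(self._versions.get(name, []))

    def deploy(self, service: str, mv: ModelVersion) -> None:
        """Point a service at a specific model version."""
        self._deployments[service] = mv

    def deployed(self, service: str) -> ModelVersion:
        return self._deployments[service]

    def lineage(self, mv: Optional[ModelVersion]) -> list[tuple[str, int]]:
        """Follow base links back to the original pre-trained model."""
        chain = []
        while mv is not None:
            chain.append((mv.name, mv.version))
            mv = mv.base
        return chain
```

For example, a `speaker-kim` model transfer-learned from a `korean-base` model would report a lineage of `[("speaker-kim", 1), ("korean-base", 1)]`.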

Service management

Speech synthesis is commonly used by calling an API from other service applications that need text-to-speech conversion. The service management function creates and manages the RESTful service interface that provides speech synthesis. Through it, you can assign specific models and system resources, and create and deliver an endpoint that other services use to connect to the model.
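The engine’s REST interface is not specified here, so the following is a hedged sketch of how a client application might call a deployed endpoint. The URL, the JSON field names (`text`, `format`), and the assumption that the response body is raw audio are all hypothetical; only Python’s standard `json` and `urllib.request` modules are real.

```python
import json
import urllib.request

# Hypothetical endpoint created through service management.
ENDPOINT = "http://localhost:8080/tts/speaker-kim"


def build_tts_request(endpoint: str, text: str, fmt: str = "wav") -> urllib.request.Request:
    """Build a POST request carrying the text to synthesize as JSON."""
    body = json.dumps(
        {"text": text, "format": fmt},  # hypothetical request schema
        ensure_ascii=False,
    ).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


def synthesize_remote(text: str, timeout: float = 10.0) -> bytes:
    """Send the request and return the response body (assumed to be audio bytes)."""
    req = build_tts_request(ENDPOINT, text)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read()
```

Keeping request construction separate from sending makes the payload easy to inspect or test without a running server.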

Core Technology

Saltlux uses transfer learning to deliver speech synthesis services personalized for each customer. Transfer learning trains a new model by starting from an existing model that is already well trained on a similar problem. Because this improves training efficiency, fine-tuning the pre-trained model can achieve high performance even with a small amount of data. For example, starting from model A, which was trained on a sufficient amount of data, transfer learning can train model B, for which only limited data is available.
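The benefit of starting from model A can be seen even in a toy setting. The sketch below swaps the speech synthesis network for a one-variable linear model trained with plain gradient descent, purely as an illustration: model A is trained on 200 examples, then a copy of A and a model initialized at zero are each trained for the same 20 steps on model B’s 5 examples. The copy of A ends with a much lower error because it starts close to B’s solution.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(w, b, data, lr=0.5, steps=100):
    """Full-batch gradient descent on the MSE, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        gw = sum(2 * (w * x + b - y) * x for x, y in data) / n
        gb = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * gw, b - lr * gb
    return w, b


# Model A: plenty of data from y = 2x + 1.
data_a = [(i / 199, 2.0 * i / 199 + 1.0) for i in range(200)]
# Model B: a related task (y = 2.2x + 1.1) with only 5 examples.
data_b = [(i / 4, 2.2 * i / 4 + 1.1) for i in range(5)]

w_a, b_a = train(0.0, 0.0, data_a, steps=2000)  # pre-train model A
w_ft, b_ft = train(w_a, b_a, data_b, steps=20)  # transfer: start from A
w_sc, b_sc = train(0.0, 0.0, data_b, steps=20)  # baseline: start from scratch

print(f"fine-tuned from A: {mse(w_ft, b_ft, data_b):.6f}")
print(f"from scratch:      {mse(w_sc, b_sc, data_b):.6f}")
```

Because both runs follow the same linear error dynamics on B’s data, the gap comes entirely from the starting point, which is the intuition behind reusing a well-trained model.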

If the data distributions of pre-trained model A and model B differ greatly, speech synthesis performance drops sharply. To address this data mismatch, Saltlux adds a semi-supervised learning method that pre-trains only part of the speech synthesis network on tens of hours of collected human voice data. This semi-supervised learning uses voice data alone, without transcriptions, much as humans learn to speak before they learn to write. By combining the semi-supervised model with transfer learning, Saltlux dramatically reduces training time and maximizes speech synthesis performance. As a result, Saltlux’s speech synthesis engine can generate high-quality voices after learning a specific person’s voice from 30 minutes of voice data.

Main engine screen