Image Recognition Engine

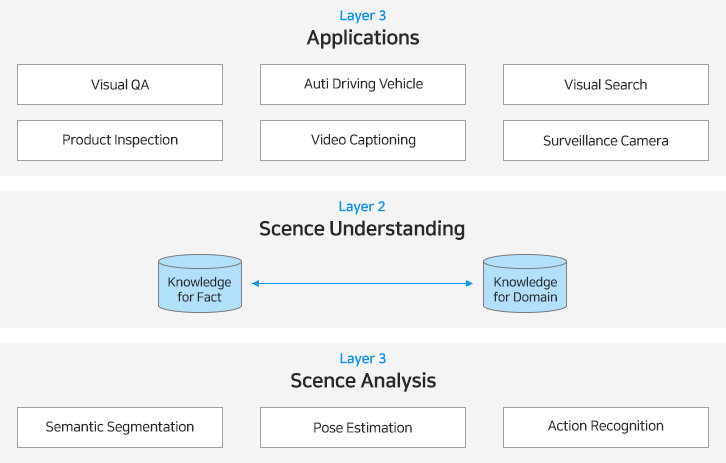

Saltlux’s AI Suite image recognition engine recognizes the objects in an image and classifies them based on the recognition results. The engine supports future applications such as visual search, video captioning, autonomous driving, and visual Q&A. Following the technology stack depicted below, Saltlux intends to improve the current engine to the point where it understands the meaning of the scenes in an image rather than just describing what is visible.

< Image recognition engine technology stack >

Main Features

- More detailed and accurate image understanding using the knowledge graph

The image recognition engine uses the knowledge graph to understand the meaning of an image from the objects it recognizes. Unlike conventional image recognition solutions that merely learn from photos tagged with words or sentences, it can be linked with a meaning-based knowledge graph, which enables more detailed and accurate image understanding.

- Domain-specific image understanding

Interpretation criteria may vary depending on domain knowledge. The knowledge graph-based image recognition engine can be linked with individual knowledge graphs built for various domains, thus producing image understanding results specific to each domain’s knowledge.

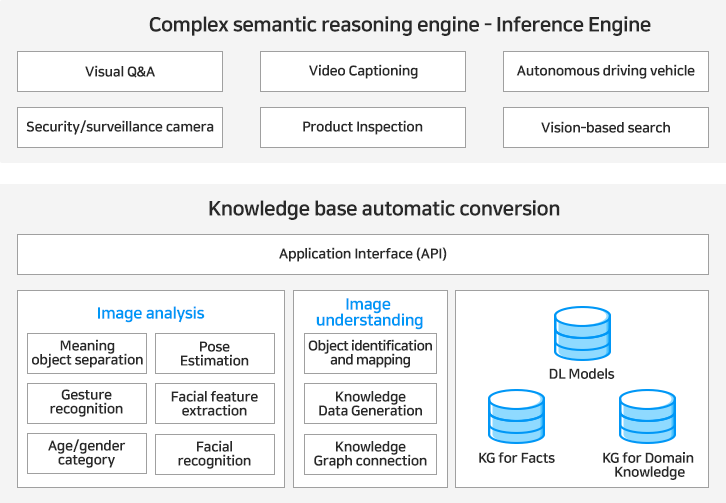

Main features and specifications

The image recognition engine processes images from cameras in real time. It then detects the situation and passes the information on to applications. To do this, it may include a visual analysis module that analyzes various aspects of the image and a visual understanding module that comprehends the situation using the learned data.

< Image recognition engine block diagram >

- Semantic Segmentation

Image recognition technology, which analyzes photographs and recognizes the kinds of objects in them, is developing alongside object detection technology, which finds the location of the recognized objects. Semantic segmentation goes a step further and determines which object each point (pixel) in an image belongs to. It is used to define each object’s boundary line along with its exact extent.

< Object recognition and object segmentation examples >
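The per-pixel labeling described above can be sketched in a few lines. This is a minimal illustration, not the engine’s implementation: it assumes a model has already produced a score for every class at every pixel, and the class names, score values, and the helper names `segment` and `boundary` are hypothetical.

```python
# Hypothetical class list; a real model defines its own label set.
CLASSES = ["background", "person", "car"]

def segment(scores):
    """Assign each pixel the class with the highest score.

    scores[c][y][x] is the score of class c at row y, column x.
    Returns a 2D grid of class indices (the label mask).
    """
    height, width = len(scores[0]), len(scores[0][0])
    return [
        [max(range(len(scores)), key=lambda c: scores[c][y][x]) for x in range(width)]
        for y in range(height)
    ]

def boundary(mask, class_id):
    """Return the (row, column) pixels of class_id that touch another class or the image edge."""
    height, width = len(mask), len(mask[0])
    edge = set()
    for y in range(height):
        for x in range(width):
            if mask[y][x] != class_id:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                outside = not (0 <= ny < height and 0 <= nx < width)
                if outside or mask[ny][nx] != class_id:
                    edge.add((y, x))
                    break
    return edge

# Toy 5x5 score maps (made-up values): a 3x3 "person" region in the centre.
person_pixels = {(y, x) for y in range(1, 4) for x in range(1, 4)}
scores = [
    [[0.1 if (y, x) in person_pixels else 0.9 for x in range(5)] for y in range(5)],
    [[0.8 if (y, x) in person_pixels else 0.1 for x in range(5)] for y in range(5)],
    [[0.0 for x in range(5)] for y in range(5)],
]
mask = segment(scores)
edge = boundary(mask, CLASSES.index("person"))
```

Here `mask` gives the exact extent of each object, and `edge` holds the outline pixels of the person region; the centre pixel is part of the region but not of its boundary.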

- Pose Estimation

Pose estimation is a technique that detects and measures the positions of human anatomical key points, such as the head, neck, shoulders, and knees, in order to determine the subject’s state or estimate its posture.

< Pose Estimation examples >

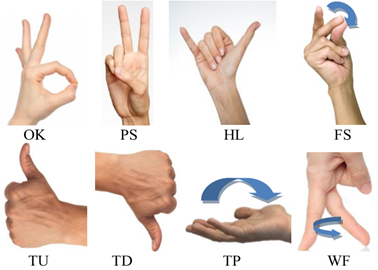
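One way to go from detected key points to a posture is to measure joint angles. The sketch below assumes key points are already available as 2D pixel coordinates; the key point names, the 150-degree threshold, and the functions `joint_angle` and `leg_state` are illustrative assumptions rather than part of the engine.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at key point b, between the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("key points must not coincide")
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against tiny floating-point overshoot.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))

def leg_state(keypoints, bent_below=150.0):
    """Label a leg 'bent' or 'straight' from the knee angle (threshold is an assumption)."""
    angle = joint_angle(keypoints["hip"], keypoints["knee"], keypoints["ankle"])
    return "bent" if angle < bent_below else "straight"

# Made-up (x, y) pixel coordinates for two poses.
standing = {"hip": (100, 200), "knee": (100, 300), "ankle": (100, 400)}
sitting = {"hip": (100, 300), "knee": (200, 300), "ankle": (200, 400)}
```

With these values, `leg_state(standing)` yields `"straight"` (the knee angle is 180 degrees) and `leg_state(sitting)` yields `"bent"` (the knee angle is 90 degrees).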

- Hand Gesture Recognition

Hand Gesture Recognition extracts and recognizes meaningful gestures from visual data, such as video and motion, acquired using a camera. It usually analyzes hand poses to assign them to a defined category of hand gestures. It detects hand movements such as clicks and scrolling and interprets their meaning without direct input.

< Hand gesture recognition examples >

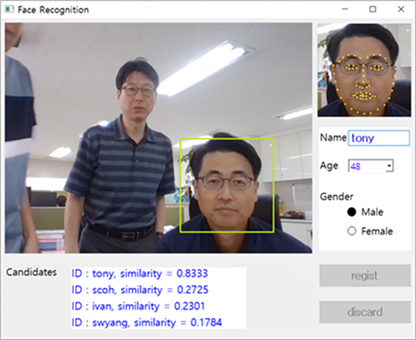

- Face Landmark Detection

Facial feature extraction detects and tracks key facial features (eyes, nose, mouth, jawline, eyebrows, etc.). This makes it possible to compensate for the rigid deformations of the face caused by head movements and the non-rigid deformations caused by facial expressions, and thus to understand facial expressions.

< Facial feature extraction and validation examples >

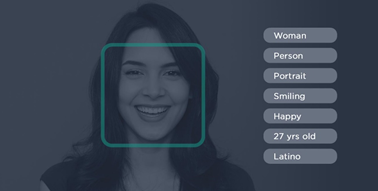

- Age-Group/Gender Classification

This feature recognizes a person’s face in the image, then classifies and estimates the age or gender of the person. In addition, it can identify additional information such as facial expression, emotional state, race, etc.

< Age/gender category example >

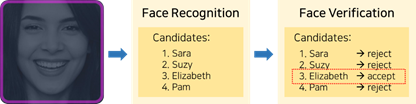

- Face Recognition and Verification

This feature identifies the person through face recognition. Face Recognition is the task of recognizing a human face in an image and identifying to whom it belongs by comparing it with pre-registered face information.

< Face recognition and verification examples >

Meanwhile, Face Verification is the task of verifying whether a face found in the image matches one of the pre-registered faces. Since the Face Recognition process produces many errors, as shown in the picture above, the image recognition engine uses Face Verification in a post-processing step to correct them.
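The interplay between recognition and verification can be sketched as follows. This is an assumption-laden illustration, not the engine’s method: it supposes faces are represented as embedding vectors compared by cosine similarity, and the gallery contents, the 0.8 threshold, and the function names are hypothetical.

```python
import math

def cosine_similarity(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def recognize(embedding, gallery):
    """Recognition: return the registered name whose face is most similar.

    This always returns someone, even for a face that was never registered.
    """
    return max(gallery, key=lambda name: cosine_similarity(embedding, gallery[name]))

def verify(embedding, registered, threshold=0.8):
    """Verification: accept only if the two faces are similar enough."""
    return cosine_similarity(embedding, registered) >= threshold

def identify(embedding, gallery, threshold=0.8):
    """Recognition followed by verification as a post-processing check."""
    name = recognize(embedding, gallery)
    return name if verify(embedding, gallery[name], threshold) else None

# Made-up 3-dimensional embeddings; real embeddings are much longer.
gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
known_face = [0.9, 0.1, 0.0]
unknown_face = [0.6, 0.5, 0.7]
```

For `unknown_face`, `recognize` still returns the nearest registered name, which is exactly the kind of error the verification step filters out: `identify` returns `None` because the similarity falls below the threshold.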

- Image understanding

The image recognition engine includes a process using the knowledge graph to understand the meaning of the identified image. In addition to general facts, it can provide specialized image understanding results, which can be interpreted differently depending on the domain knowledge.

① Knowledge Graph for Fact

A knowledge graph (KG) for facts is a way of representing knowledge in which two objects and the relation between them are expressed as a triple. The Visual Genome project, led by Stanford University in the United States, is likewise building a dataset in which detailed information obtained by analyzing photos is expressed as a KG. The image understanding module of the image recognition engine uses the KG for facts to express the various analysis results obtained from the image analysis module.
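A triple-based fact store can be illustrated with a small sketch. The relation names, the example detections, and the `Triple` and `query` helpers below are hypothetical and only show the shape of the data, not the engine’s actual schema.

```python
from typing import NamedTuple, Optional

class Triple(NamedTuple):
    """One fact: two objects and the relation between them."""
    subject: str
    predicate: str
    obj: str

def query(triples, subject: Optional[str] = None,
          predicate: Optional[str] = None, obj: Optional[str] = None):
    """Return the triples matching every field that is not None."""
    return [
        t for t in triples
        if (subject is None or t.subject == subject)
        and (predicate is None or t.predicate == predicate)
        and (obj is None or t.obj == obj)
    ]

# Made-up analysis results for one image, expressed as facts.
facts = [
    Triple("man", "riding", "bicycle"),
    Triple("man", "wearing", "helmet"),
    Triple("bicycle", "on", "road"),
]

about_man = query(facts, subject="man")
on_road = query(facts, predicate="on", obj="road")
```

Here `about_man` collects the two facts whose subject is the man, and `on_road` finds which object is on the road.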

② Knowledge Graph for Domain Knowledge

To better understand a situation through images, you need to consider not only facts but also a variety of knowledge related to them. More specifically, an application needs domain knowledge about the recognized objects in order to interpret an image in a way that fits its domain. For this purpose, the image understanding module can express knowledge specific to a particular domain as a KG for that domain. This knowledge can then be combined with the KG for facts in each application service.
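As a rough sketch of how the same facts can yield different results per domain, the example below combines one set of fact triples with two small domain KGs. Everything in it is an illustrative assumption: the predicates (`requires`, `used_in`), the two domains, and the `interpret` function are not taken from the engine.

```python
# Facts extracted from one image (made-up example).
fact_kg = {("man", "riding", "bicycle"), ("bicycle", "on", "road")}

# Hypothetical domain KGs: the same object carries different knowledge per domain.
traffic_safety_kg = {("bicycle", "requires", "helmet")}
sports_kg = {("bicycle", "used_in", "cycling")}

def interpret(facts, domain):
    """Derive domain-specific findings by joining fact triples with domain triples."""
    findings = set()
    for subject, predicate, obj in facts:
        if predicate != "riding":
            continue
        for d_subject, d_predicate, d_obj in domain:
            if d_subject != obj:
                continue
            if d_predicate == "requires" and (subject, "wearing", d_obj) not in facts:
                # The domain says the ridden object requires gear the rider lacks.
                findings.add((subject, "missing", d_obj))
            elif d_predicate == "used_in":
                # The domain maps the ridden object to an activity.
                findings.add((subject, "doing", d_obj))
    return findings

safety_view = interpret(fact_kg, traffic_safety_kg)
sports_view = interpret(fact_kg, sports_kg)
with_helmet = interpret(fact_kg | {("man", "wearing", "helmet")}, traffic_safety_kg)
```

The same image facts produce a safety finding in one domain and an activity label in the other, and adding a helmet fact removes the safety finding.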

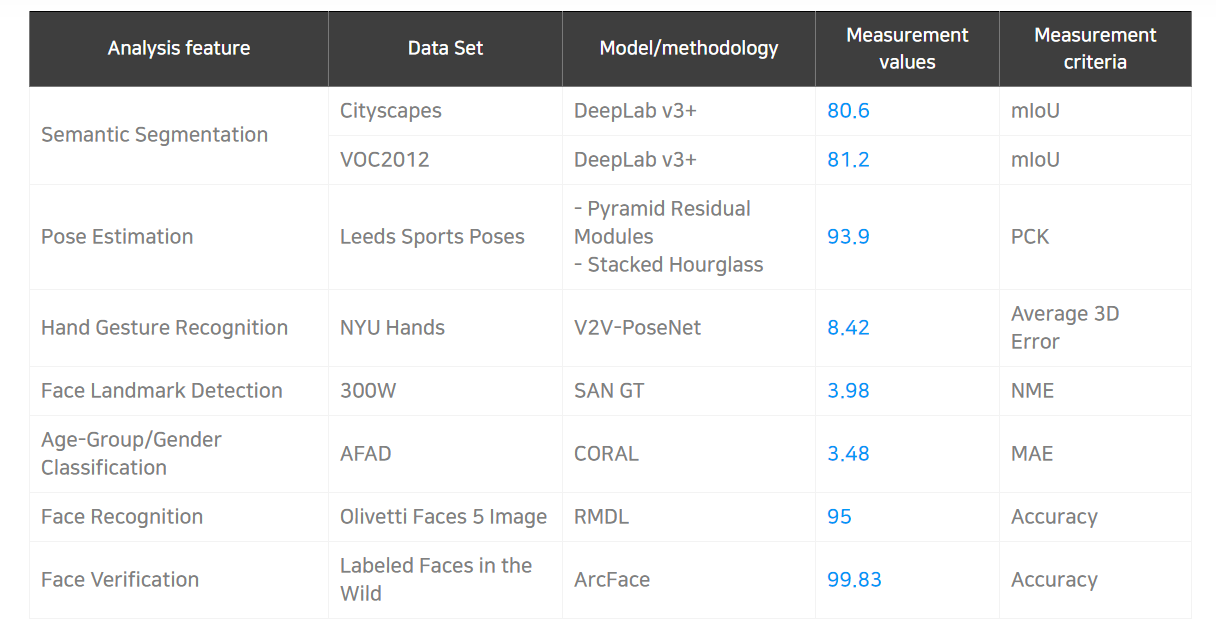

Main Features

Each of the image recognition engine’s features described above is under constant research and development. The state of the art (SOTA) is shown in the following table.

< State of the art per image recognition feature >