Speech Recognition Engine STT

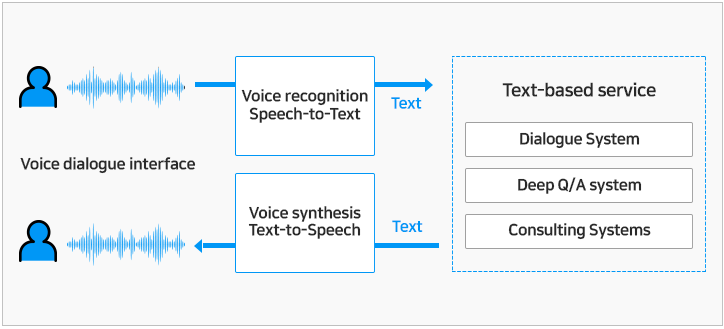

Speech recognition, also known as STT (Speech-to-Text), is the process by which a computer interprets speech uttered by a person and converts it into text. The speech recognition engine is a system that can be used in various services built on a speech interface.

Saltlux AI Suite’s speech recognition engine has been pre-trained with a large amount of data. In addition, the transfer learning method used for quick application to a specific domain makes it possible to provide high-quality services with only a small amount of data.

< Dialogue interface-based service construction >

Main Features

Deep neural network-based speech recognition learning

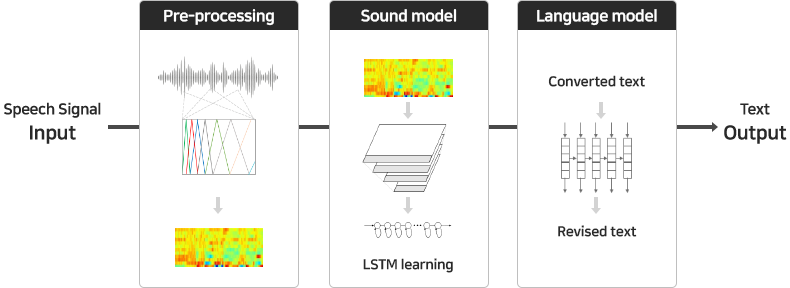

AI Suite’s speech recognition engine is based on adaptive learning of an acoustic model refined with deep learning. Its baseline acoustic model uses Long Short-Term Memory (LSTM) technology, which is more advanced than the commonly used HMM (Hidden Markov Model) or conventional fully connected DNN (Deep Neural Network) acoustic models.

< Deep neural network-based speech recognition learning summary >

Large-capacity multilingual speech DB learning

Saltlux maintains its own multi-speaker speech data covering different situations and various languages. AI Suite’s speech recognition engine is built on multilingual, high-quality baseline speech recognition models trained on this data, which makes it possible to provide high-quality speech recognition services.

Main functions and specifications

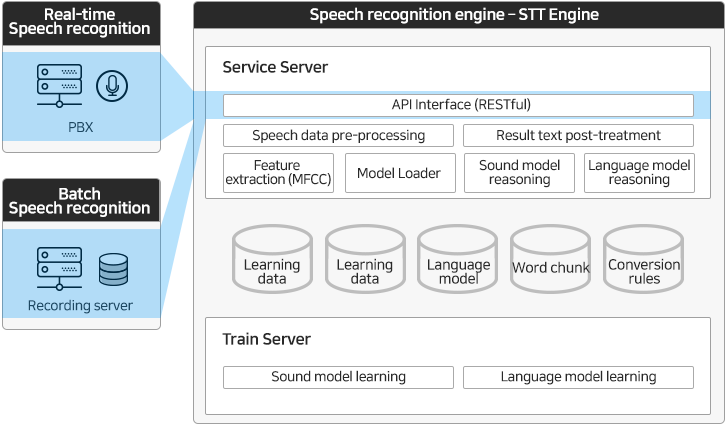

The speech recognition engine can be divided into two parts: a RESTful speech recognition service, and learning management for acoustic models and language models. The speech recognition service produces recognition results by preprocessing the input speech data, extracting features, converting them to text, and correcting the result. Learning management takes paired speech-text data as learning data and uses it to train the acoustic and language models.

< Speech recognition engine block diagram >

Speech recognition service

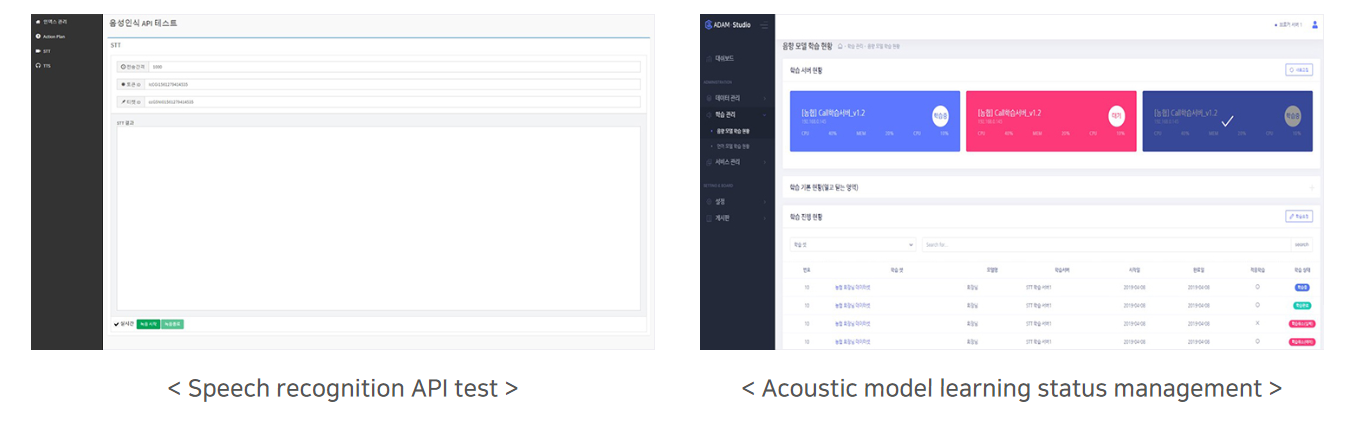

Speech recognition is typically used by other service applications that need speech recognition functionality and call the APIs provided by the engine. The speech recognition engine exposes this functionality through a RESTful endpoint API service. Because the interface is RESTful, a service application can access it regardless of its own system environment, and various STT-based AI services can be implemented using the provided functions.
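As a rough sketch of how a service application might call such a RESTful endpoint, the Python below builds an HTTP POST request carrying audio and parses a JSON reply. The endpoint URL, the `audio/wav` content type, and the `text` response field are hypothetical placeholders and not the engine's documented API; the actual interface should be taken from the product's API reference.

```python
import json
import urllib.request

# Hypothetical endpoint; replace with the address of the deployed engine.
STT_ENDPOINT = "http://localhost:8080/stt/recognize"


def build_stt_request(audio_bytes, endpoint=STT_ENDPOINT):
    """Build (but do not send) a POST request carrying raw audio bytes."""
    return urllib.request.Request(
        endpoint,
        data=audio_bytes,
        headers={"Content-Type": "audio/wav"},  # assumed input format
        method="POST",
    )


def parse_stt_response(body):
    """Extract the transcript from a JSON body; the "text" field is assumed."""
    payload = json.loads(body)
    return payload.get("text", "")


def recognize(audio_bytes, endpoint=STT_ENDPOINT, timeout=30):
    """Send the audio to the endpoint and return the recognized text."""
    request = build_stt_request(audio_bytes, endpoint)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_stt_response(response.read().decode("utf-8"))
```

Keeping request construction and response parsing separate from the network call lets the calling application test both without a running engine.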

Acoustic model adaptive learning

Speech recognition turns speech data into text using two pre-trained models: an Acoustic Model (AM) and a Language Model (LM). The AM learns by statistically modeling the acoustic properties of the speech data. Adaptive learning of the AM starts from a baseline model provided by the speech recognition engine and adds the speech properties of a target domain to it. It can be performed on existing baseline models by using recorded speech data or transcription data collected in specific fields (call center, etc.) as learning data. The acoustic model trained with Long Short-Term Memory (LSTM) provides better speech recognition performance than HMM- and DNN-based models, and it can provide speech recognition functions specialized for the field.

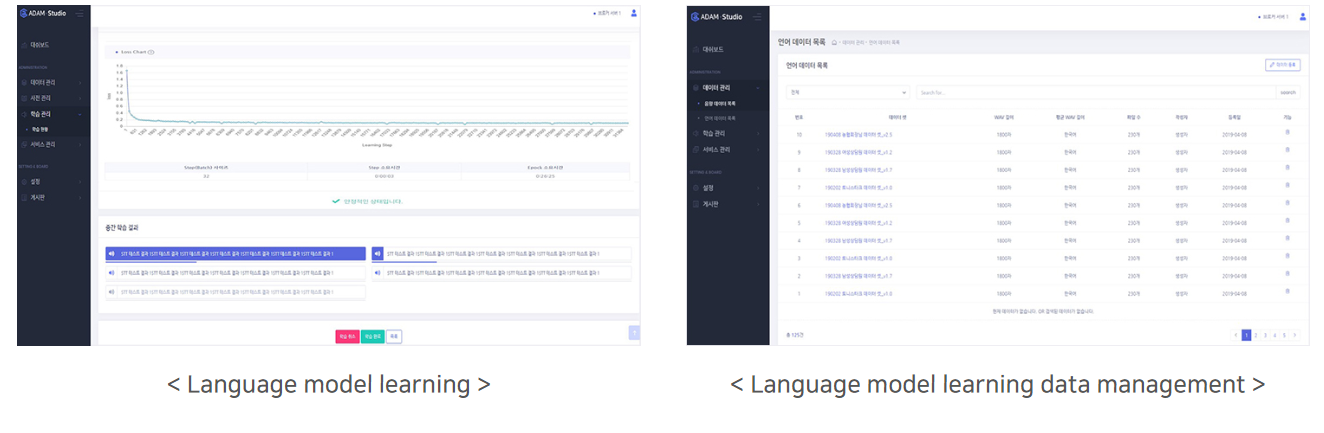

Language model learning

By reflecting the characteristics of linguistic expressions used in specific fields (financial, call center, etc.), the engine can provide better-quality speech recognition functions specific to the service through language model learning. The LM learns grammatical rules, such as vocabulary selection and the structure of the sentences that are converted to text. It can be learned statistically from a large corpus, or defined as arbitrary rules using a formal language.
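To illustrate what learning a language model statistically from a corpus can mean, the following is a minimal bigram sketch in Python. It is not the engine's actual training method, which the source does not describe; the function names and the three-sentence corpus are made up for illustration, and a production model would add smoothing and a far larger corpus.

```python
from collections import Counter


def train_bigram(sentences):
    """Count word contexts and word pairs over whitespace-tokenized sentences."""
    contexts = Counter()
    bigrams = Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        contexts.update(tokens[:-1])            # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))
    return contexts, bigrams


def bigram_prob(contexts, bigrams, prev, word):
    """Maximum-likelihood estimate of P(word | prev), without smoothing."""
    if contexts[prev] == 0:
        return 0.0
    return bigrams[(prev, word)] / contexts[prev]


# Illustrative domain corpus (invented, banking call-center style).
corpus = [
    "check my account balance",
    "check my card balance",
    "transfer money to my account",
]
contexts, bigrams = train_bigram(corpus)
p_account = bigram_prob(contexts, bigrams, "my", "account")  # 2 of 3 uses of "my"
```

A model like this prefers word sequences that are frequent in the domain corpus, which is how domain text can steer recognition toward field-specific expressions.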

Providing a high-quality speech recognition model

The acoustic model and language model provided by the speech recognition engine include a baseline model that achieves high performance through training on 1,200 hours of Korean data.

Main characteristics

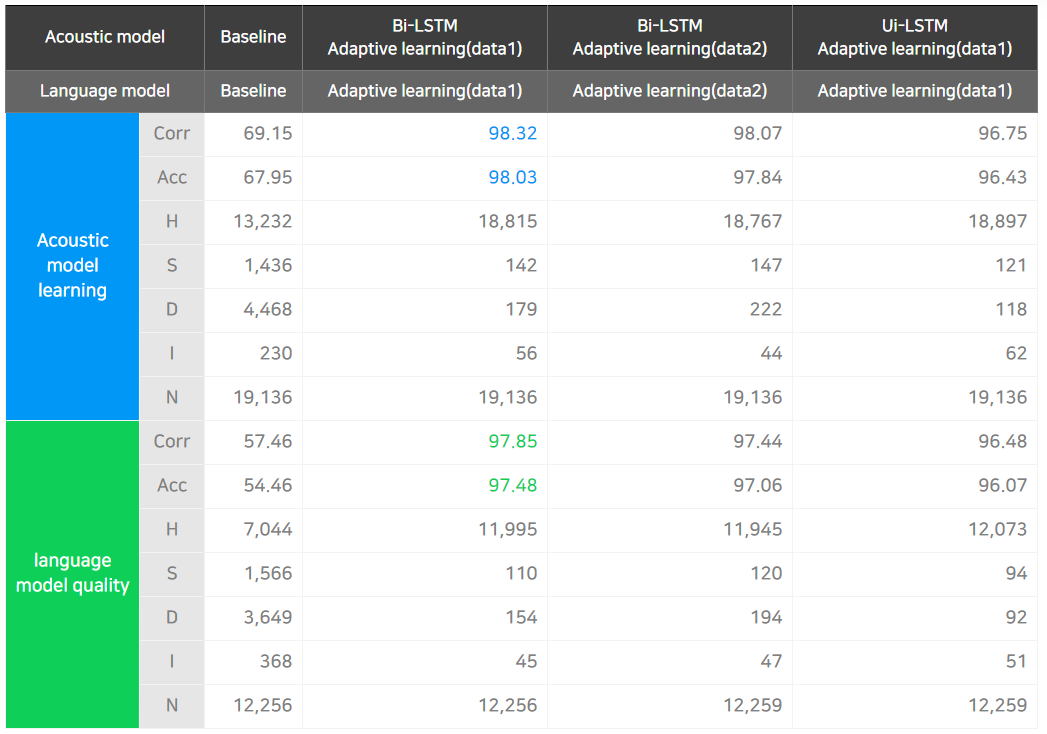

The following table shows the results of adaptive learning-based speech recognition quality evaluation.

Corr (Correct) is the number of correctly recognized syllable units.

Acc (Accuracy) is the score for correct answers after insertion and deletion errors are taken into account.

H (hit) is the number of correctly recognized words.

D (deletion) is the number of cases recognized as silence.

S (substitution) is the number of cases that were recognized as a different word.

I (insertion) is the number of cases in which silence was recognized as another syllable.
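The relationship between these quantities can be sketched in Python as follows. This assumes the widely used convention in which the reference length is N = H + D + S, Corr = H / N, and Acc = (H - I) / N, both expressed as percentages; the source does not state the exact formulas, and the counts in the example are invented for illustration, not taken from the evaluation.

```python
def recognition_scores(hits, deletions, substitutions, insertions):
    """Return (Corr, Acc) in percent, assuming N = H + D + S reference units."""
    total = hits + deletions + substitutions
    if total == 0:
        raise ValueError("reference must contain at least one unit")
    corr = 100.0 * hits / total                 # ignores insertion errors
    acc = 100.0 * (hits - insertions) / total   # also penalizes insertions
    return corr, acc


# Illustrative counts only: H=90, D=4, S=6, I=3.
corr, acc = recognition_scores(hits=90, deletions=4, substitutions=6, insertions=3)
```

Under this convention Acc can never exceed Corr, and it can even become negative when the recognizer inserts many spurious units.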

Before adaptive learning, the baseline acoustic and language models each had a correct answer rate of 70% or less. After adaptive learning, the rate rose to 97% for both models. The resulting speech recognition technology is used in various environments, such as speech recognition, text analysis, and callbot system construction.

< Quality evaluation of adaptive learning-based speech recognition technology >

Main engine screen